The drawn-out negotiations over revamping Colorado’s artificial-intelligence regulations have hit another stumbling block — a proposed liability amendment introduced Sunday that AI-system developers worry could mire them unfairly in legal action.

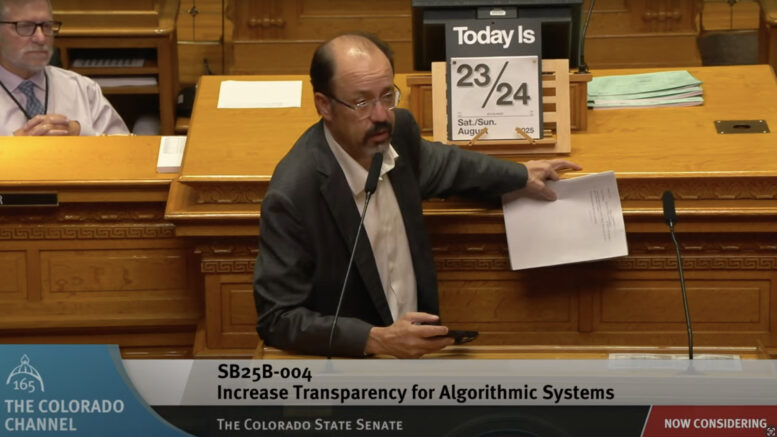

Legislators, in addition to seeking to close a $783 million budget shortfall, are debating in special session how to roll back rules in a 2024 law that technology leaders have said are so unworkable that they could lead to an exodus of AI developers from Colorado when they go into effect in February. Two bills seek to tackle the issue — one from the original law’s sponsor that would scrap some disclosure requirements but boost protections in other ways and the other from bipartisan sponsors that sought to simplify rules so much that it worried consumer groups.

Both bills have undergone major changes since clearing their first committees on the opening day of the session Thursday. Sponsors of the bipartisan bill, House Bill 1008, gutted its regulatory-change provisions and replaced them with only an extension of the effective date for the 2024 law until October 2026, which they say will give legislators the full 2026 session to debate needed reform.

Senate Majority Leader Robert Rodriguez, the Denver Democrat behind the 2024 law and Senate Bill 4, on Sunday pulled from SB 4 a requirement that deployers provide individuals who face adverse decisions from AI programs a list of the 20 personal characteristics of those people that most influenced the decision. Facing criticism that the provision was unworkable, Rodriguez replaced it with language requiring AI deployers to provide affected individuals, upon request, with a general description and list of personal characteristics that most substantially influenced the decision.

A sticky liability provision

However, Rodriguez also added to the bill during debate on the Senate floor an amendment establishing joint and several liability for both developers and deployers if an AI system is found to violate Colorado antidiscrimination or consumer-protection law. And while he subsequently pulled the provision out of the bill in order to continue negotiating it with business, consumer and labor groups, it’s clear that the issue of liability is the newest roadblock to achieving any sort of consensus.

SB 4 originally included a provision that could allow lawsuits against both developers and deployers of AI systems, which tech leaders said could generate liability for developers over deployments they do not control. But that provision offered several safe harbors, stating a developer would not be jointly and severally liable if they took all reasonable steps available to prevent misuse and if they did not intend and could not have reasonably foreseen the misuse.

The new provision, however, would remove the specific safe-harbor provisions and would state instead that developers are jointly and severally liable with the deployer if:

- The deployer of the AI system used the system for its intended or advertised purpose;

- The deployer used the AI system in a manner intended or reasonably anticipated by the developer; or,

- The output of the AI system was not materially altered by data that the deployer provided and that involved the deployer’s independent judgment or discretion.

It then states further that a person held jointly and severally liable under this provision has a right of contribution from another person found liable and that a court shall determine the percentage of fault attributable to the developer and deployer.

Tech leaders fear impact on artificial-intelligence sector

Leaders of a coalition negotiating for business interests — including the Colorado Chamber of Commerce, the Colorado Technology Association and TechNet — warned this shift in liability could go as far as to inhibit new or existing AI development in Colorado.

It could subject developers, for example, to liability for a business using AI systems in making hiring decisions, despite developers not making employment decisions or having sufficient visibility into customer data necessary to determine whether an employment decision violates the Colorado Anti-Discrimination Act, they argued. And it could encourage deployers to outsource discrimination decisions to software vendors who don’t make decisions governed by anti-discrimination laws.

“One of the big concerns we have heard from developers and deployers is that they both very much want to be responsible for the parts they have control over,” said Rachel Beck, executive director of the Colorado Chamber Foundation, in testimony Thursday. “Developers are building systems, and they don’t have control over customizations of the folks they sell to. Deployers are customizing systems, but they don’t set up the framework. And we think there’s some additional work to do to ensure that assessing specific role responsibility is happening so that liability achieves the goal of the legislation.”

Why both sides are digging in their heels

Rodriguez told the Senate Business, Labor and Technology Committee on Thursday that he wants to add joint and several liability to the law because deployers who may be accused of discrimination may not know everything about the AI system they are buying. And he said when introducing the liability amendment on Sunday that the provision still allows individual liability if a developer can prove they did nothing wrong.

In questioning Adams Price, chairman of the CTA board of directors, about liability during Thursday’s committee hearing, Rodriguez said he is concerned people otherwise will sue deployers of AI systems when it’s the developer’s system that led to biased decisions.

“If your AI is used and you developed it and it isn’t modified — and it’s used as you intended — and it creates a harm, should you have liability?” Rodriguez asked.

Responded Price: “Someone should have liability. I don’t think both should have liability … As a developer, I don’t have a relationship with a consumer. I don’t have a relationship with someone who’s directly using that system. And so, I don’t have visibility directly into that. There should be liability. But I don’t believe in that instance, the developer should have joint and several liability.”

Artificial-intelligence debate extending length of special session

While the Legislature already has passed and dispatched several revenue-related bills to Gov. Jared Polis, the two AI-regulatory bills have been moving much more slowly through the process as their sponsors engage in constant negotiations.

Rodriguez had seemed to indicate during discussion of SB 4 on the Senate floor late Sunday that he’d gotten the bill to the place where he was ready to move forward with it. However, he then pulled the successful liability amendment off the bill, as he said he needed to continue negotiating it. And the bill, which received preliminary approval Sunday and was slated to go for a final vote in the Senate on Monday morning, has been delayed on the calendar again.

It’s not just business interests who have expressed concern about the impact of a joint-and-several-liability provision in the bill. Amy Bhikha, chief data officer for the governor’s Office of Information Technology, told the Senate committee that the provision also could create “substantial legal liability” for the state if there is misuse by vendors of an AI system.

In order for either SB 4 or HB 1008 to pass, the special session must continue through at least Tuesday.